It's Docker-ing Time! Part 1

Intro and Musings

So when I first started this blog rambling™ about all sorts of nonsense™, I was fixated on Proxmox and LXCs. And man, I went crazy over LXCs…how cool. Super lightweight, easy to deploy, etc etc. My LXC count grew and grew: Nginx Manager, Audio Bookshelf, Plex, Technitium (DNS server), PiHole, and so on. I realized I was relying too much on the tteck scripts (RIP man) and that whole community kind of…imploded. I also saw that many of the new apps I wanted to host didn’t have an LXC script, and I started to see the cracks in the foundation of LXC.

Don’t get me wrong, I still think they’re great and I still use them. But I find myself not lighting up new ones and going another way. A buddy of mine that I work with was like, “why not use Docker?” And I was like…“I don’t know.” Cue the training montage.

Six months later, I have quite the Docker deployment for my little self-hosting environment. Lighting up new apps is very simple, and I haven’t yet found an app I wanted that isn’t “docker-ized”. Usually when I get excited about a technology or new shiny thing I’m using, I just go off the cuff and start ramblin’. This felt different though. So when the itch to write got too strong to ignore, I took a step back. I watched some deep dive videos, took some courses, and took a close look at my environment. I realized I had fallen into some bad practices, so I did some housecleaning and fixing. Don’t get me wrong, everything was functional, but I wanted to provide a practical guide that followed some best practices.

Disclaimer: You may have a better way. My explanations/musings may seem silly or too basic. This is how I understand it and how I implement it.

What is Docker?

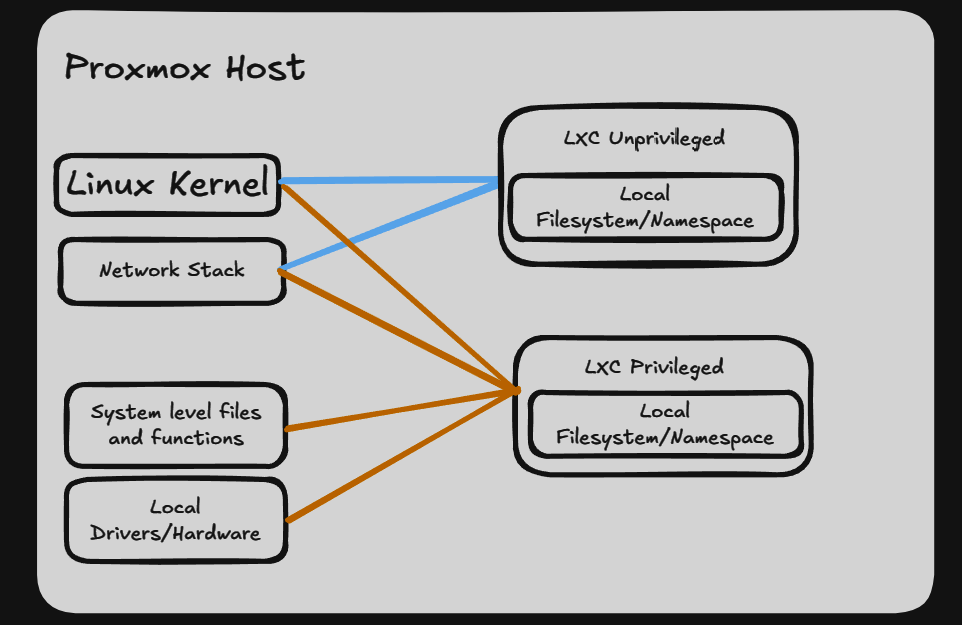

Contrary to the header here, let’s first talk about what an LXC is. It’s container-based virtualization, but it’s tied tightly to the host OS. It does have its own file system, but it shares the host’s kernel. By default it is “unprivileged”, which limits its blast radius, but it can also be set to “privileged”, which grants it even deeper access to the host (arguably dangerous). Regardless of the Linux distribution the host is on, you can make an LXC with just about any other Linux distribution, due to the nature of the shared Linux kernel. Because of this, LXCs start extremely quickly, as they’re not booting their own kernel or initializing their own hardware drivers.
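If you want to see the shared kernel for yourself, one rough check is to ask for the kernel release on the Proxmox host and again inside an LXC. A minimal sketch, assuming Python 3 is available in both places (the function name `kernel_release` is just mine for illustration):

```python
import platform


def kernel_release():
    """Return the release string of the kernel this process is running on."""
    return platform.release()


if __name__ == "__main__":
    # Run this on the host and inside the LXC and compare the two lines.
    print(platform.system(), kernel_release())
```

On a host and one of its LXCs, both runs should report the same kernel release even if the distributions differ. Inside a full VM you would expect to see whatever kernel that VM booted instead.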

You can still have unique networking, mount external file shares, etc etc. From the perspective of the LXC, it’s a full OS with nearly all the capabilities of a full OS. With Proxmox, you actually configure the network settings for a CT (container) from the Proxmox GUI, not inside the CT directly. Every instance of a CT is unique and independent, and takes up an IP on your network as well.

In fact, they look so much like a regular VM that I tag them in Proxmox so I know what they are at a glance. CTs can be backed up and even moved between different hosts in your cluster, just like a VM. But because they share the local kernel, they must be shut down briefly, moved to the other host, and then restarted there. It’s fast, but there is a drop in service, and how long it lasts depends on the size of the CT.

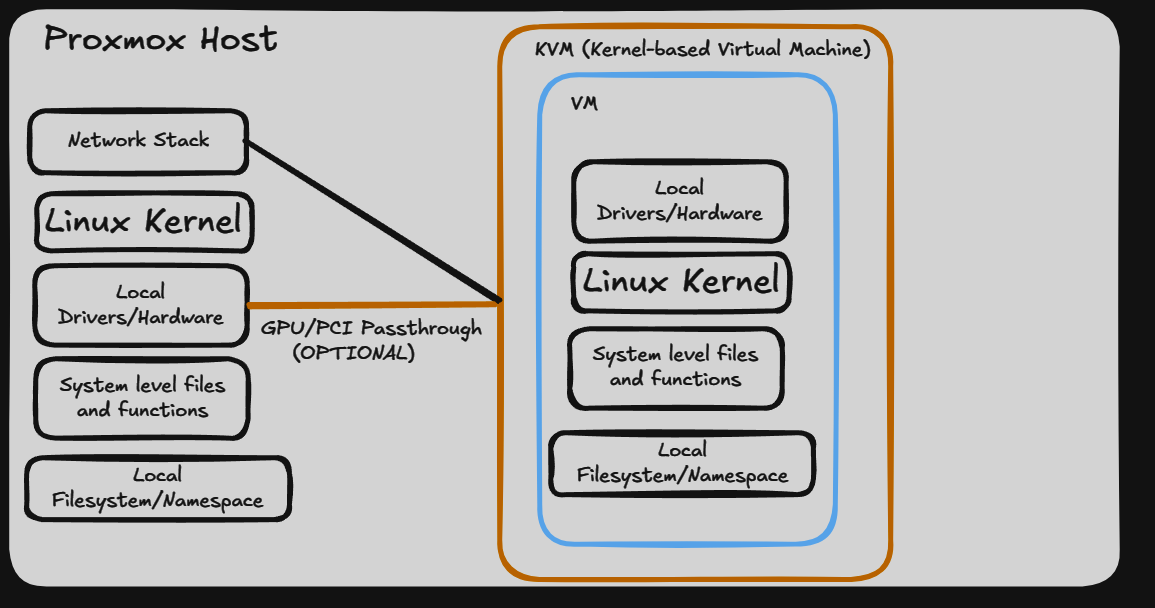

Now let’s talk about Docker. You install Docker inside a separate VM running something like Ubuntu Server. Unlike an LXC, a VM (virtual machine) is an island unto itself. It is totally separate from the host OS. Separate kernel, drivers, everything.

The network stack is edited in the VM, etc. If you need to, you can also “passthrough” physical devices like a GPU to a VM. It takes much longer to boot than an LXC, but it can be “live migrated” to another host since it does not share the host’s kernel.

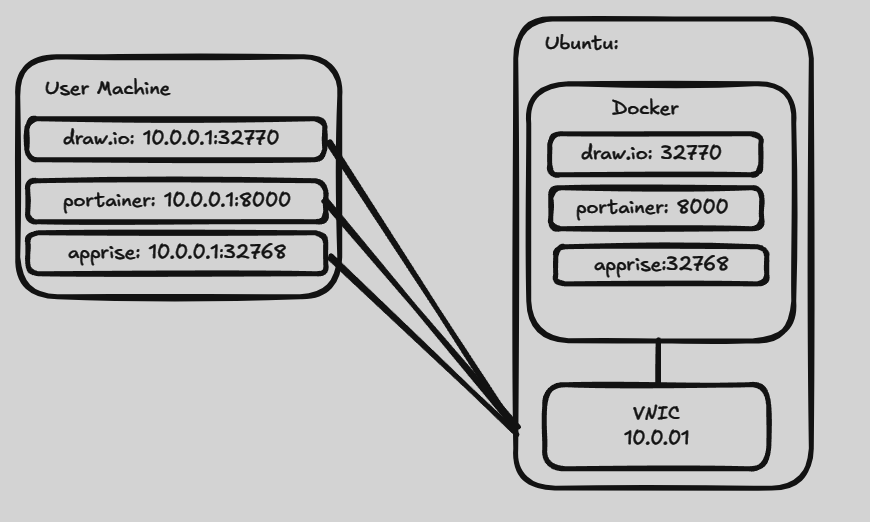

So with Docker, I want you to think about it like this. Imagine you install an application on your machine, but you want to expose that application to everyone over the network via a web page. Multiple applications can be installed and reached at the same IP (the Docker VM’s), just on different ports. When you pair this with Nginx, wonderful things can happen.

Note: Docker apps don’t HAVE to be network accessible, but for this guide we will assume everything is reachable.
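To make the “same IP, different ports” idea concrete, here is a small sketch in plain Python. It is not Docker at all: two throwaway web servers stand in for two containerized apps, both listening on one address (`127.0.0.1` here) with ports picked by the OS. The app names `app-one` and `app-two` are made up.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


def make_app(name):
    """Build a tiny web app that answers every GET with its own name."""

    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            body = f"hello from {name}".encode()
            self.send_response(200)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, *args):
            pass  # keep the demo output quiet

    return Handler


def start_app(name, host="127.0.0.1"):
    """Start one app on a free port (port 0 lets the OS choose)."""
    server = HTTPServer((host, 0), make_app(name))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch(server):
    """Request "/" from the given app and return the response text."""
    host, port = server.server_address
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", "/")
        return conn.getresponse().read().decode()
    finally:
        conn.close()


def demo():
    """Run two apps on one IP and return {port: reply} for each."""
    apps = [start_app("app-one"), start_app("app-two")]
    try:
        return {srv.server_address[1]: fetch(srv) for srv in apps}
    finally:
        for srv in apps:
            srv.shutdown()
            srv.server_close()


if __name__ == "__main__":
    for port, reply in demo().items():
        print(f"127.0.0.1:{port} -> {reply}")
```

Same address, two ports, two different answers. That is roughly what your browser sees when several containers publish ports on one Docker VM.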

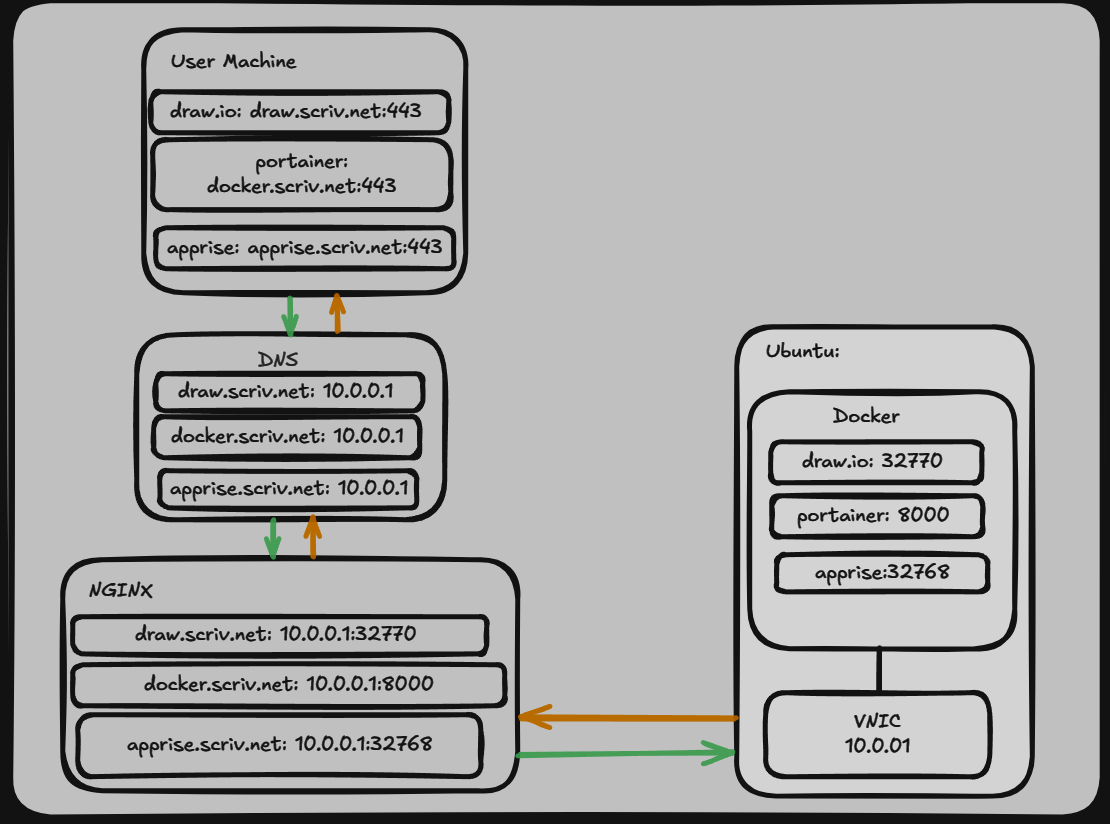

The image above shows what the end user would experience by default. In order to access the application they must go to the VM’s IP and port number. This is tedious though. Let’s add in something like Nginx to make this easier for the end user.

(Let’s be honest, the end user is you. ;) )

Using Nginx and local DNS, we can make the end user experience much simpler. Using our proxy, we also obfuscate where the site is located. You can also leverage Let’s Encrypt to get certs for the domain names. As luck would have it, I’ve already written a post about that.

It's a secret to everybody!
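If the proxy part feels like magic, the core of it is just a lookup table: a friendly hostname comes in, and the proxy forwards the request to an IP and port. Here is a toy sketch of that lookup in Python. The hostnames, the IP, and the ports are all hypothetical, and a real Nginx setup does this through its own configuration rather than code like this.

```python
# Hypothetical routing table: friendly name -> (Docker VM IP, published port).
ROUTES = {
    "books.home.example": ("192.168.1.50", 8081),
    "media.home.example": ("192.168.1.50", 8082),
}


def resolve_upstream(host_header):
    """Return (ip, port) for the hostname in a Host header, or None if unknown.

    Only plain hostnames are handled here; an optional ":port" suffix is dropped.
    """
    hostname = host_header.split(":")[0].strip().lower()
    return ROUTES.get(hostname)


if __name__ == "__main__":
    for name in ("books.home.example", "media.home.example:443", "nope.home.example"):
        print(name, "->", resolve_upstream(name))
```

Local DNS points every one of those names at the proxy, and the proxy decides where each request really goes. The end user never has to remember a port number.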

Docker Images

Let’s talk about applications. Say I want to install Audio Bookshelf on my Ubuntu Server. I have to find the source for the application, determine the dependencies, download multiple packages, etc etc. It’s really a pain. What if it uses a different version of PHP or Python than the one I have installed? It can snowball from there into an unmanageable mess.

A Docker image is the solution for this. Someone writes a Dockerfile that describes the application and all of its dependencies, then builds an image from it. The base OS inside the image can be a different Linux distribution as well. When run as a container, it doesn’t depend on anything installed on the local system, and nothing other than Docker itself needs to be installed. The image is also immutable. Anything the container writes while it runs lands in a thin writable layer that belongs to that one container, so when the container is removed and recreated (which is what happens on an update), everything vanishes and you’re back to how it was when you started it the first time.

(there are exceptions to this, but it doesn’t matter for this guide)

To make our data permanent, we need to use “docker volumes”, where we essentially mount folders from outside the container through our Docker startup configuration. We can save data like app configurations, and even mount external shares for application access. This is especially important when it’s time to update a Docker image. The image is immutable, remember? When the developer wants to update the application, they update the Dockerfile and generate a new Docker image with the changes.

Then you pull the new image, recreate the container from it, and you’re up and running with the new code. If you properly set the volumes for your config settings, then it’s largely transparent to you.
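Here is how I picture the update flow, as a toy model in Python. This is not how Docker is implemented, and the class and paths are invented for illustration. The only point is to show which data survives when a container is recreated from a newer image and which data doesn’t.

```python
from types import MappingProxyType


class Container:
    """Toy model: read-only image + private writable layer + mounted volumes."""

    def __init__(self, image, volumes=None):
        self.image = MappingProxyType(dict(image))  # read-only view of the image
        self.layer = {}  # private to this container, gone when it is removed
        self.volumes = volumes if volumes is not None else {}  # lives outside

    def _volume_for(self, path):
        """Return the volume that covers this path, or None."""
        for mount_point, store in self.volumes.items():
            if path == mount_point or path.startswith(mount_point.rstrip("/") + "/"):
                return store
        return None

    def write(self, path, data):
        store = self._volume_for(path)
        if store is not None:
            store[path] = data  # persists outside the container
        else:
            self.layer[path] = data  # stays in the container's own layer

    def read(self, path):
        store = self._volume_for(path)
        if store is not None:
            return store.get(path)
        if path in self.layer:
            return self.layer[path]
        return self.image.get(path)


# The volume is created once and outlives any single container.
config_volume = {}

old = Container({"/app/version": "1.0"}, {"/config": config_volume})
old.write("/config/settings.json", '{"theme": "dark"}')  # goes to the volume
old.write("/tmp/cache", "scratch data")  # goes to the container layer

# "Update": throw the old container away, start a new one from the new image.
new = Container({"/app/version": "2.0"}, {"/config": config_volume})

print(new.read("/app/version"))
print(new.read("/config/settings.json"))
print(new.read("/tmp/cache"))
```

The new container sees the new application version and the old settings, while the scratch file written outside the volume is gone. That is the whole reason to be deliberate about what you mount.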

We’ll cover this in more detail in Part 2, as we’ll be installing Docker and getting things set up.

Until next time.